Can you give us a brief introduction to your role at Lokalise?

Hi, I’m really glad to be here, and I’m looking forward to a very interesting conversation. A little bit about myself: I’m the Engineering Manager for the AI/ML team, which is part of the translations domain. We’re responsible for the AI translations that Lokalise provides. I’m originally from Bulgaria but based in the UK.

I have a PhD in clinical information extraction, which is an old-school NLP (Natural Language Processing) AI discipline, and I spent about seven or eight years in health tech AI before joining Lokalise.

What were you building in health tech?

Six or seven years ago, early chatbots were emerging and starting to be AI-powered rather than rules-based. One of the things I was doing was extracting information from user queries and making sure we connected them to the right answers or handled them correctly.

Later on, just before ChatGPT became mainstream, I built a tool for medics to summarize their video consultations based on video and audio signals.

It was similar to the note-taking features we now have in Google Meet or Zoom, but built specifically for clinicians and with less advanced technology, so it needed extensive tuning.

What are you most excited about now in terms of AI?

Personally, I’m most excited about this new generation of AI-enhanced tools, like Cursor. Cursor is basically an IDE (an integrated development environment for programming), but it’s fully AI-integrated. You can prompt your way through developing software, which is a fundamentally different approach because tasks that used to take a lot of time to type and execute now happen much faster. There are trade-offs: sometimes the AI isn’t perfect, but it’s fast.

And the same approach can be applied to other things, like copywriting and all kinds of text-based activities.

I see the new generation of AI-enhanced tools changing how people work and how much work we can accomplish. We’re going to be very bad at it initially, but eventually we’ll improve, and the models will get better too.

So that’s pretty exciting for me at the moment.

Is Cursor a tool that non-technical people like myself could use to create apps and software?

Cursor probably isn’t there yet for non-technical users. There’s another tool called Lovable that’s more high-level and accessible. Cursor works best when you know exactly what you want to build and it helps you build it, whereas Lovable enables everyday people to build small apps, maybe using templates.

This is basically “vibe coding.” Instead of writing code and thinking through everything it does, you look at it and get a vibe for whether it’s roughly what you want. If you had to think through everything that gets written, it would take too long, so it’s easier to get a vibe and test it.

Is there one AI trend that you think is overhyped, and maybe one that’s not getting enough love at the moment?

This is interesting because for me, it’s actually the same technology. The cornerstone of current AI utilization is single-shot learning. Our AI models are really good at learning how to do something when you give them just one example. This is incredibly useful because you can interact with AI in very specific ways. You can tell it to write a Product Requirements Document (PRD) exactly how you want it, not just a generic version.

And it delivers a lot of value through techniques like retrieval-augmented generation (RAG), where you provide context and small examples of exactly what you want. But here’s the overhyped part: people think this approach will lead us to AGI (Artificial General Intelligence) or be the path to superintelligence. I think people are wrong. This is not how we’re going to get there.

What’s missing is memory. This is both the curse and the blessing of current AI: you have to explain everything from scratch every time.

If you want to do something twice, you need to provide the same context and instructions for that single-shot learning to happen again.

This is not the case for humans: if you have an intern and show them how to write a PRD, maybe they need a few tries, but a week later they’re writing their own PRDs without you explaining the process again.

With AI, we always have to provide exact instructions, and its non-deterministic nature makes it less predictable.
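To make the “explain everything from scratch every time” point concrete, here is a minimal sketch of single-shot prompting, assuming a generic stateless text-in/text-out model. The `Example` type, `build_one_shot_prompt`, and `call_model` are hypothetical names for illustration, not any real vendor API. Because nothing persists between calls, the one worked example has to be packed into every prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One worked example the model should imitate (hypothetical structure)."""
    task: str
    output: str


def build_one_shot_prompt(example: Example, new_task: str) -> str:
    """Assemble a single-shot prompt: instruction, one example, then the new task.

    The model keeps no memory between calls, so the example must be included
    in every prompt it is supposed to influence.
    """
    return (
        "Follow the format of the example exactly.\n\n"
        f"Example task: {example.task}\n"
        f"Example output:\n{example.output}\n\n"
        f"Task: {new_task}\n"
        "Output:\n"
    )


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a stateless model API call."""
    return f"<model response to {len(prompt)} characters of prompt>"


prd_example = Example(
    task="Write a PRD for a dark-mode toggle",
    output="## Problem\n...\n## Goals\n...\n## Non-goals\n...",
)

# Two separate requests: each one re-sends the same example,
# because the second call knows nothing about the first.
for feature in ["CSV export", "Single sign-on"]:
    prompt = build_one_shot_prompt(prd_example, f"Write a PRD for {feature}")
    print(call_model(prompt))
```

The design choice is deliberately naive: the “learning” lives entirely in the prompt string, which is exactly the limitation described above. Unlike the intern, the model never gets to stop being shown the example.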

You mentioned RAG. Is that still considered single-shot, even though it’s almost retrieving information by itself?

I mean, we’re kind of simulating what’s really missing: memory.

AI models can’t save something, learn it, and retain it. What companies do is emulate that with an external system that acts as memory, and RAG is essentially that.

We save things like past translations and provide relevant glossary terms for current translations. This stands in for what would be experience in a human translator: past translation work and expertise.

We’re giving the right context so AI can construct something resembling past experience from information.
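The “external memory” idea can be sketched in a few lines. This is a toy illustration, not Lokalise’s implementation: the class and method names are hypothetical, and simple word-overlap (Jaccard) similarity stands in for the embedding-based retrieval a production RAG system would more likely use. Past translations and glossary terms are stored outside the model, and only the relevant pieces are retrieved into the prompt for each new string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PastTranslation:
    source: str
    target: str


def _tokens(text: str) -> set[str]:
    return set(text.lower().split())


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a toy stand-in for embedding search."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


class TranslationMemory:
    """Hypothetical external store playing the role of 'memory' for a stateless model."""

    def __init__(self, glossary: dict[str, str]) -> None:
        self.glossary = glossary
        self.history: list[PastTranslation] = []

    def save(self, source: str, target: str) -> None:
        # Persist a (reviewed) translation so later requests can reuse it.
        self.history.append(PastTranslation(source, target))

    def similar(self, text: str, k: int = 2) -> list[PastTranslation]:
        # Rank stored translations by similarity; drop ones with no overlap.
        ranked = sorted(self.history, key=lambda p: jaccard(text, p.source), reverse=True)
        return [p for p in ranked[:k] if jaccard(text, p.source) > 0]

    def glossary_hits(self, text: str) -> dict[str, str]:
        # Naive substring match of glossary terms in the new string.
        lowered = text.lower()
        return {term: tr for term, tr in self.glossary.items() if term.lower() in lowered}

    def build_prompt(self, text: str, target_lang: str) -> str:
        # Retrieved context is rebuilt for every request: the model itself remembers nothing.
        lines = [f"Translate into {target_lang}."]
        for term, tr in self.glossary_hits(text).items():
            lines.append(f"Glossary: '{term}' -> '{tr}'")
        for past in self.similar(text):
            lines.append(f"Past translation: '{past.source}' -> '{past.target}'")
        lines.append(f"Text: {text}")
        return "\n".join(lines)


memory = TranslationMemory({"workspace": "Arbeitsbereich"})
memory.save("Create a new workspace", "Neuen Arbeitsbereich erstellen")
memory.save("Log out", "Abmelden")
print(memory.build_prompt("Delete this workspace", "German"))
```

Here the unrelated “Log out” entry is filtered out because it shares no words with the new string, while the glossary term and the similar past translation are injected as context, which is the “something resembling past experience” described above.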

How do you think these AI developments are changing the health tech landscape?

There’s huge opportunity for change, but it’s also very slow to adopt new technology due to inherent conservatism. When I was in health tech, I saw many things we could potentially do, but ultimately it’s a very human-based system.

I don’t think we’ll see massive change soon. AI in health tech will always lag behind. Unless the system changes fundamentally, AI won’t be able to help as much, because care is inherently human. The healthcare domain is highly optimized and the humans in it are incredibly skilled, so it’s difficult to compete with them.

I’d say we probably won’t see big impact in the next five to ten years. There will be gradual changes and improvements.

Governments need to centralize data better and relax governance slightly to leverage AI effectively in health tech.

Eventually we’ll need to rethink how hospitals work and how health tech is applied, but these will be slow processes. You don’t want rapid changes in healthcare because they have profound effects on people’s health and lives.

And in localization for healthcare, do you see AI playing a big role?

I actually did localization for one of my previous companies. We approached it similarly to how we do it now at Lokalise: lots of machine translation and fine-tuning models for good results. We were localizing into Chinese, which was particularly difficult since we had no native speakers and only one expensive translator who could handle healthcare data properly.

Healthcare data isn’t regular content: nuances matter even more for the machine learning models we train.

Healthcare companies will have similar challenges now, but it’ll be much easier to handle localization efforts. On the other hand, healthcare organizations like hospitals trying to localize their apps or web portals will face challenges similar to software localization in any domain.

What’s common for both is that you want humans to review the translation and ensure it’s correct. I don’t think that will change in the foreseeable future.

In healthcare, there are particularly strong regulatory and branding reasons to maintain human oversight.

Have you seen an increase in adoption of AI in the healthcare industry?

Yes, overall it’s happening across many fronts. Everyone’s trying, but the percentage truly adopting AI is actually quite small. In healthcare, especially in Europe, you have strict regulations about medical devices, and most AI applications related to decision-making are classified as medical devices.

Many health tech companies try to build something AI-related, but few actually manage to use AI legally and make a real difference. I think that number is going to grow, but steadily. It’s not going to be an explosion.

⚡ Lightning round

What’s one thing AI still can’t do?

Well, remember how to do what it just did.

How do you imagine AI in daily life 10 years from now?

It’s difficult to imagine, but I think it’ll be everywhere. In 10 years, we’ll be asking ourselves the questions we should be asking now: How much should we adopt AI? What are the safety procedures? I think we’ll be having the crisis we’re having now with social media, but with AI, which doesn’t sound great, but maybe we’ll handle it better this time.

If you could give AI a voice, what celebrity would it be?

Okay, so I think Adam said Emma Thompson, which I stand by. But my own answer would be a synthetic voice. I think the most fitting choice would be a fully synthesized voice, one that’s entirely AI rather than modeled on someone else.

If AI could learn one human skill overnight, what would it be or what should it be?

I won’t say memory this time, though it should be memory. Beyond memory, it would need genuine empathy and genuine creativity. Those are the three things missing right now: memory (learning from experience), empathy, and creativity. At the moment it mostly repeats things we’ve already seen.

What’s one localization task that you’ll only trust a human with?

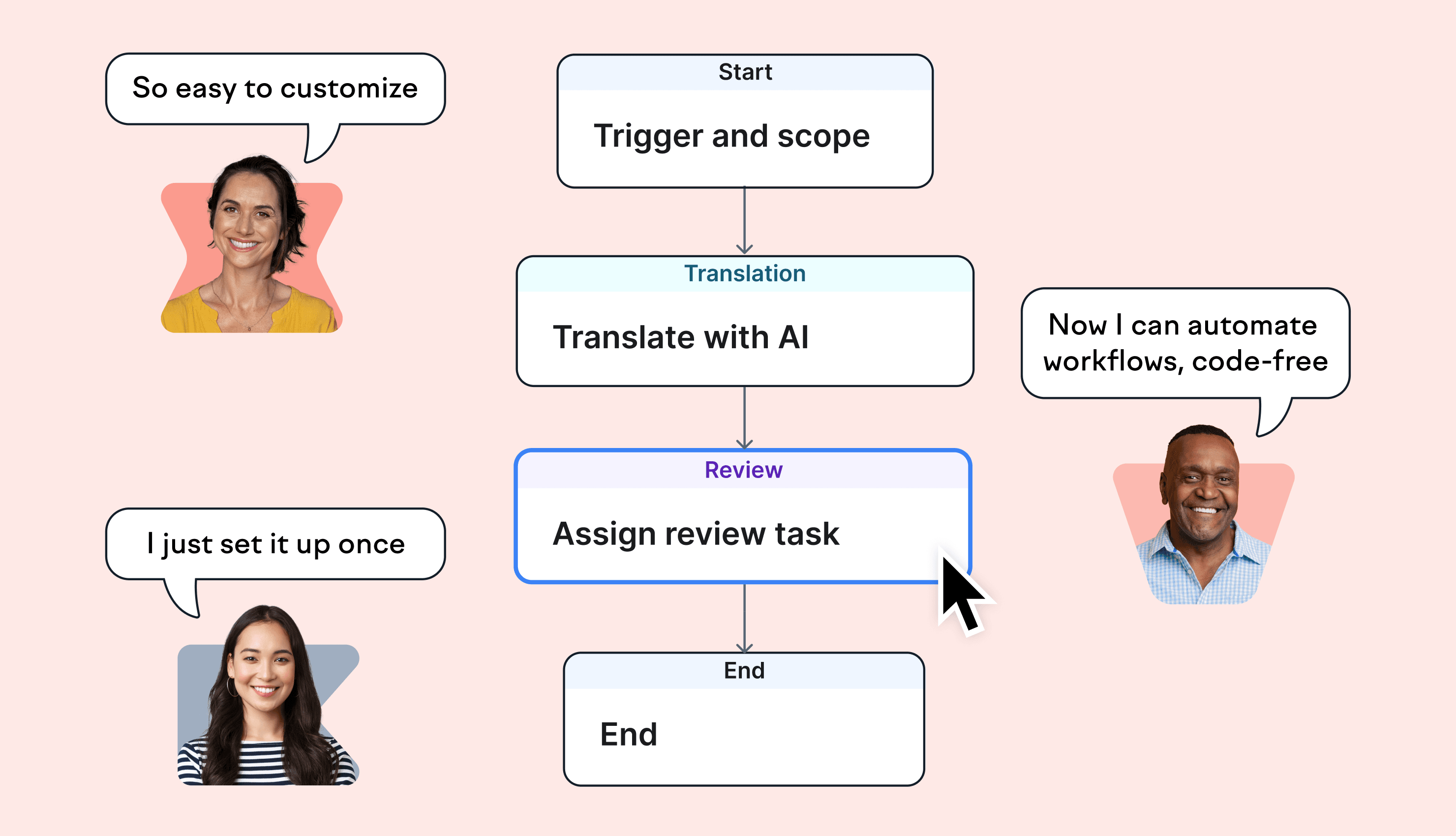

I’m from the team that would argue the opposite, honestly. I think review is still very important. Human review is something we need to have in many cases. The extent depends on the use case, but I think it’s valuable both for the AI and the end result to have humans provide final review.