Limitations and challenges of NMT

Neural machine translation can produce fluent-sounding results that are sometimes inaccurate or miss the mark from a cultural perspective. It also relies heavily on large, high-quality datasets, and these are not always available for less common languages.

It can miss the deeper meaning

NMT is good at surface-level translation, but it often stumbles on things like sarcasm, humor, or niche cultural references. So while it might nail the words, it can completely miss the overall tone.

It's heavily reliant on the available data

These models learn by example, which means they need huge amounts of high-quality bilingual data (texts paired with their translations). For common language pairs, that’s usually not a problem. But for less widely used languages, the system might struggle simply because it hasn’t seen enough examples.

It sounds confident (even when it's wrong)

One of the biggest issues with NMT is that it can produce fluent, natural-sounding translations that are just… wrong. That’s risky if you’re dealing with sensitive content like legal contracts, medical info, or safety instructions.

It doesn't truly "understand" the way humans do

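NMT models aren’t really “thinking” or reasoning. They generate a translation one word (or word fragment) at a time, predicting the most likely next word based on the source text, the words they’ve already produced, and patterns learned from training data. This means that they don’t actually understand the world or the meaning behind the text the way a human does.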
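To make “predicting the most likely next word” concrete, here is a deliberately simplified toy sketch. It is not how a real NMT model works internally: a real model uses a neural network that looks at the whole source sentence and everything generated so far, while this example assumes a small, hand-written (hypothetical) probability table keyed only on the previous word. What it does show is the core idea: at each step, the output is chosen because it is statistically likely, not because the system understands it.

```python
# Toy illustration only: NOT a real NMT model.
# NEXT_WORD_PROBS is a hypothetical, hand-written table standing in for the
# probabilities a trained model would compute at each step.
NEXT_WORD_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "house": 0.2},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sleeps": 0.7, "<end>": 0.3},
    "dog": {"barks": 0.8, "<end>": 0.2},
    "house": {"<end>": 1.0},
    "sleeps": {"<end>": 1.0},
    "barks": {"<end>": 1.0},
}


def greedy_decode(probs: dict[str, dict[str, float]], max_len: int = 10) -> str:
    """Build a sentence by always picking the single most likely next word."""
    words: list[str] = []
    current = "<start>"
    for _ in range(max_len):
        candidates = probs.get(current)
        if not candidates:
            break  # no known continuation for this word
        # Choose the highest-probability continuation: pure statistics, no meaning.
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        words.append(current)
    return " ".join(words)


print(greedy_decode(NEXT_WORD_PROBS))  # prints "the cat sleeps"
```

If the probabilities in the table were skewed or simply wrong, the decoder would still produce a fluent-looking sentence, which is the same reason real NMT output can sound confident while being inaccurate.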

It can reflect biases in data

If the training data includes biased or stereotypical language (and it often does), the model can repeat those same biases in its translations. For example, when translating from a language with gender-neutral pronouns, a model may default to “he” for doctors and “she” for nurses. This remains a major challenge for the fair and ethical use of neural machine translation.

Popular use cases for neural MT today

Neural machine translation is used for everything from translating websites and product descriptions to powering real-time translation in apps like Google Translate. It can help you localize content faster and even change the way you approach your go-to-market activities.

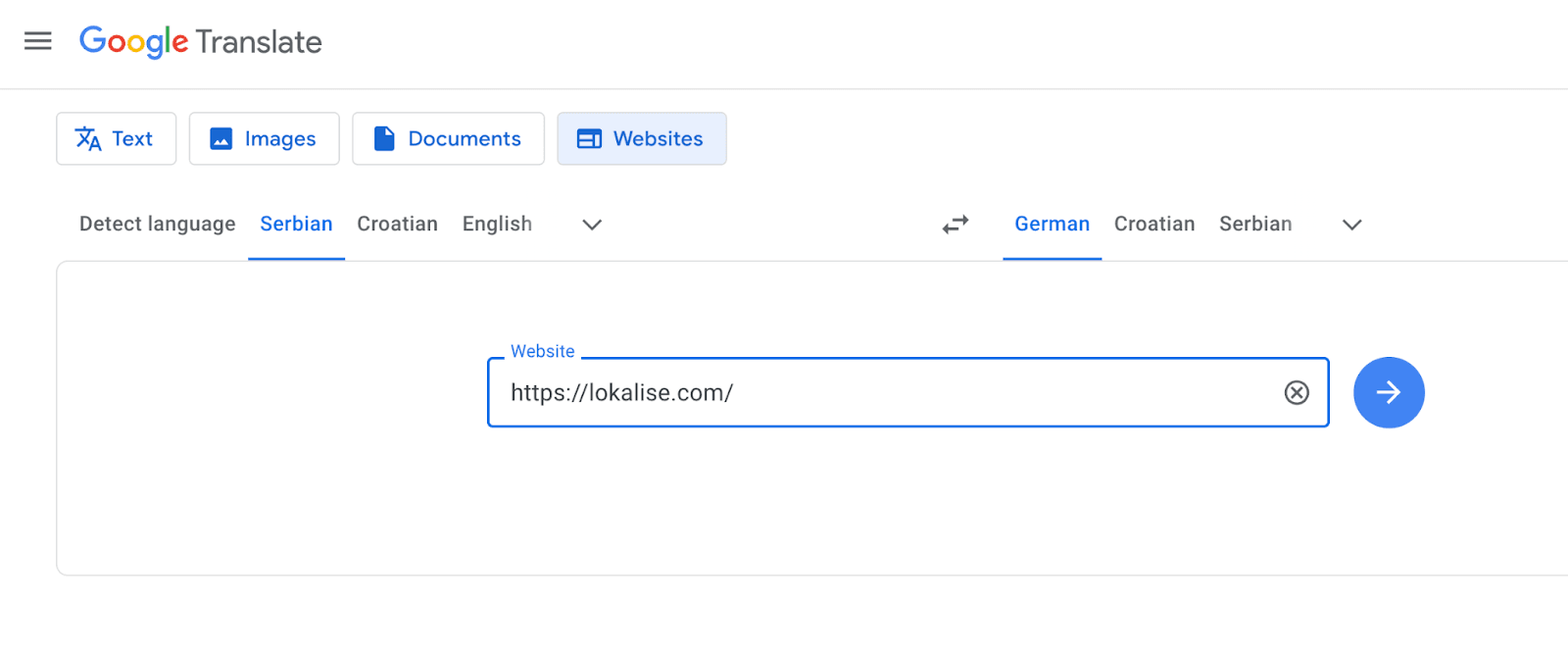

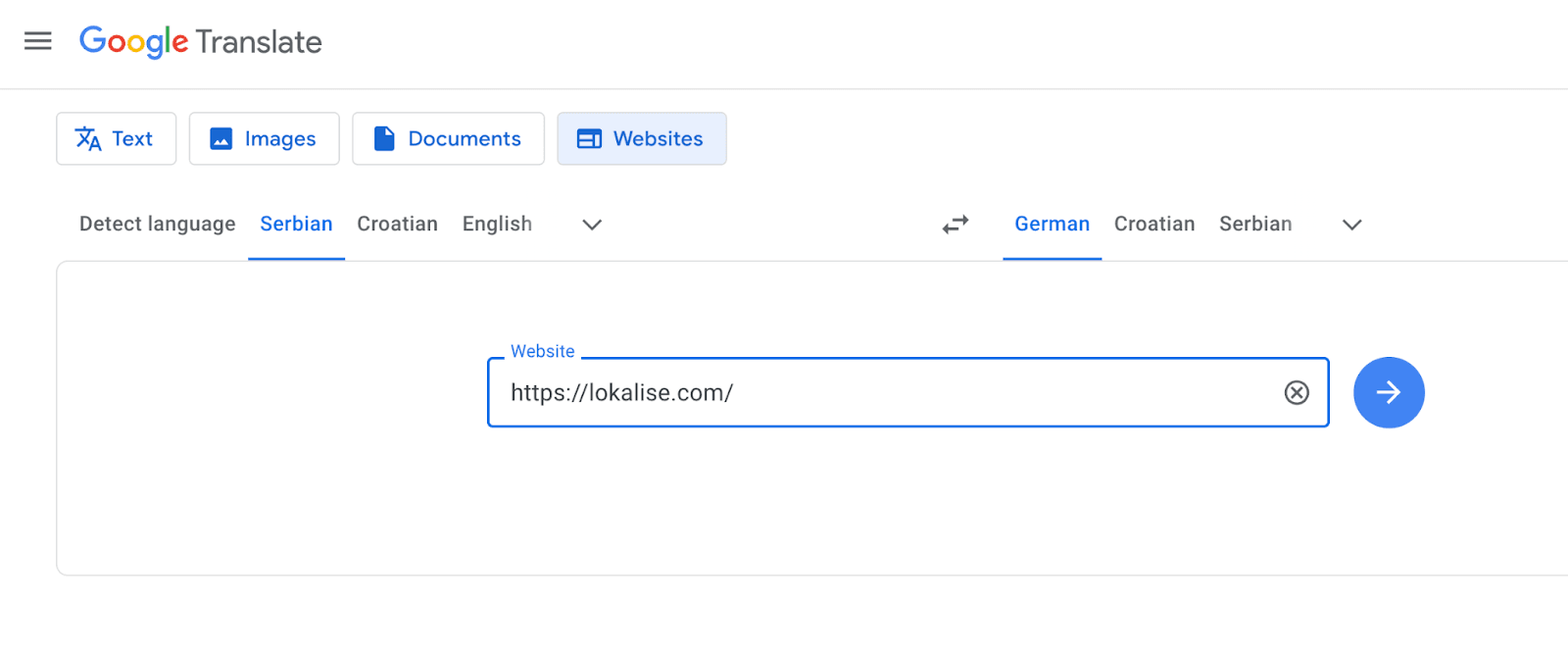

Let’s take a look at how you can use Google Translate for website translation. Basic use is free, so let’s try it on the Lokalise website:

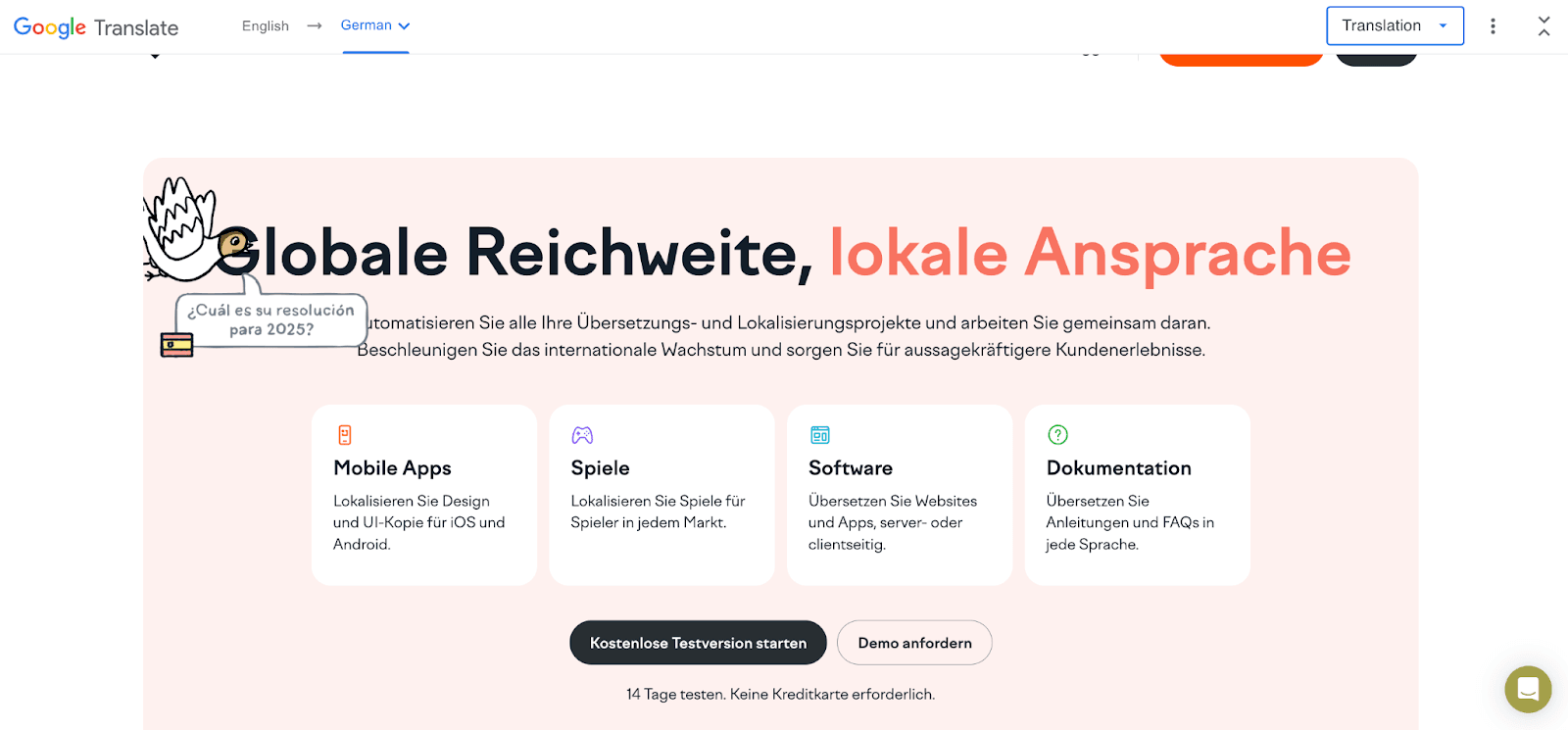

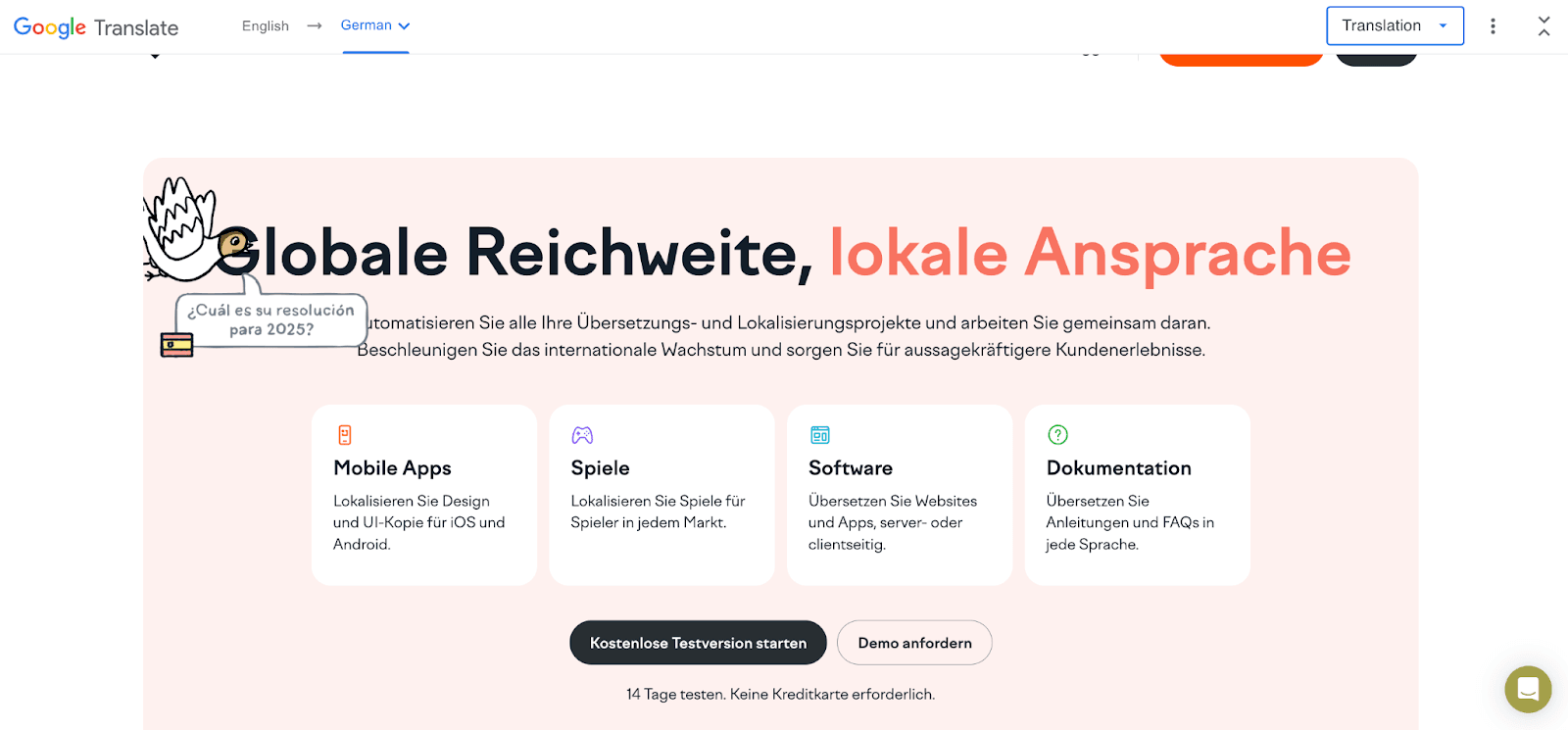

Here’s how the website appears in German through the Google Translate preview.

The translation quality is decent, but you can see that some of the translated copy doesn’t fit neatly into the design. A human still needs to step in to fix these layout issues and improve accuracy.

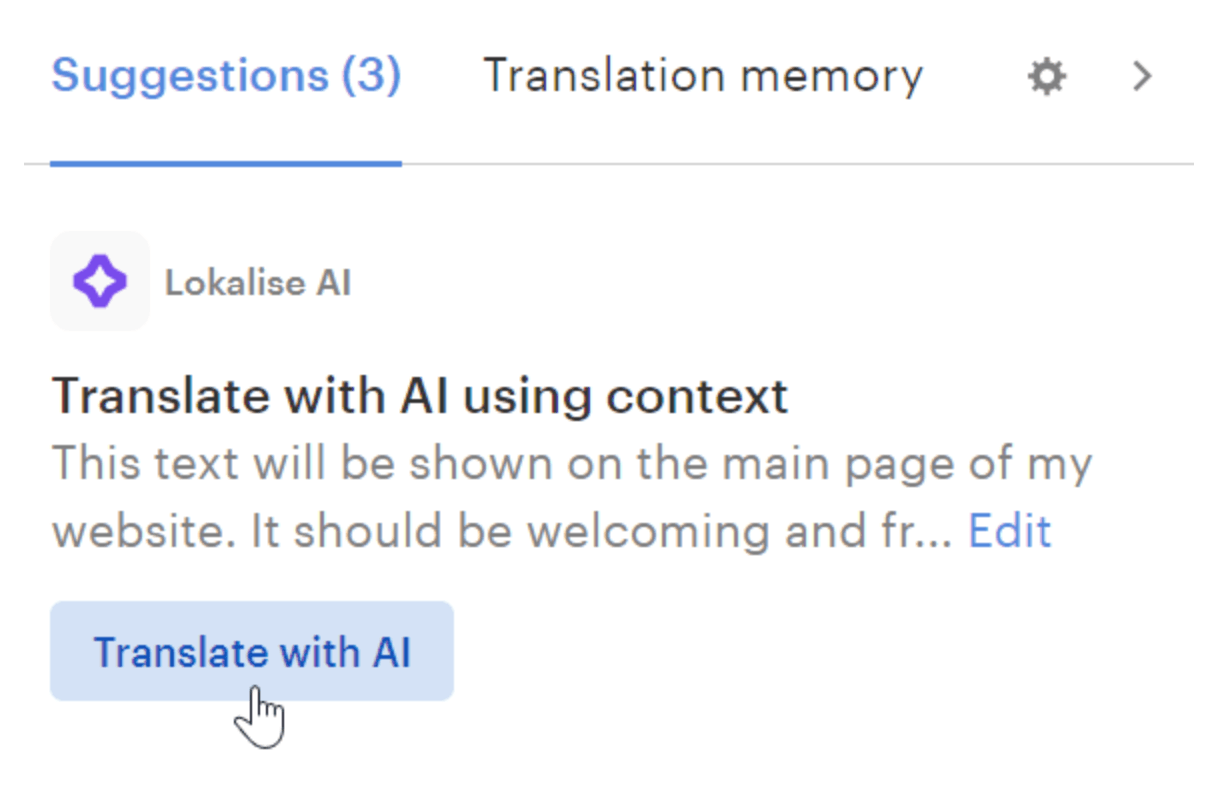

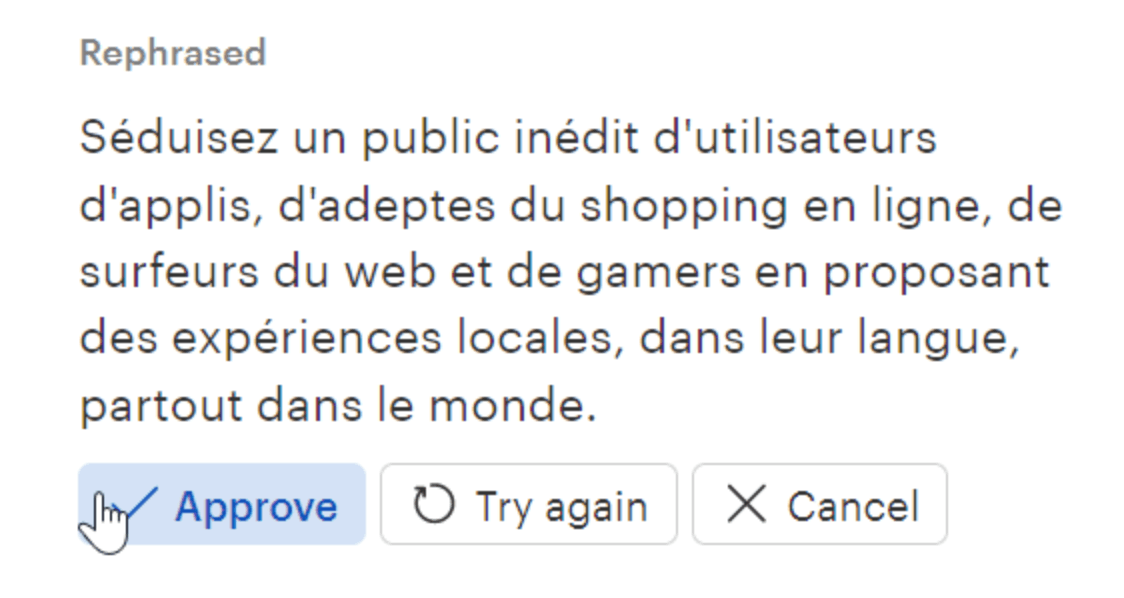

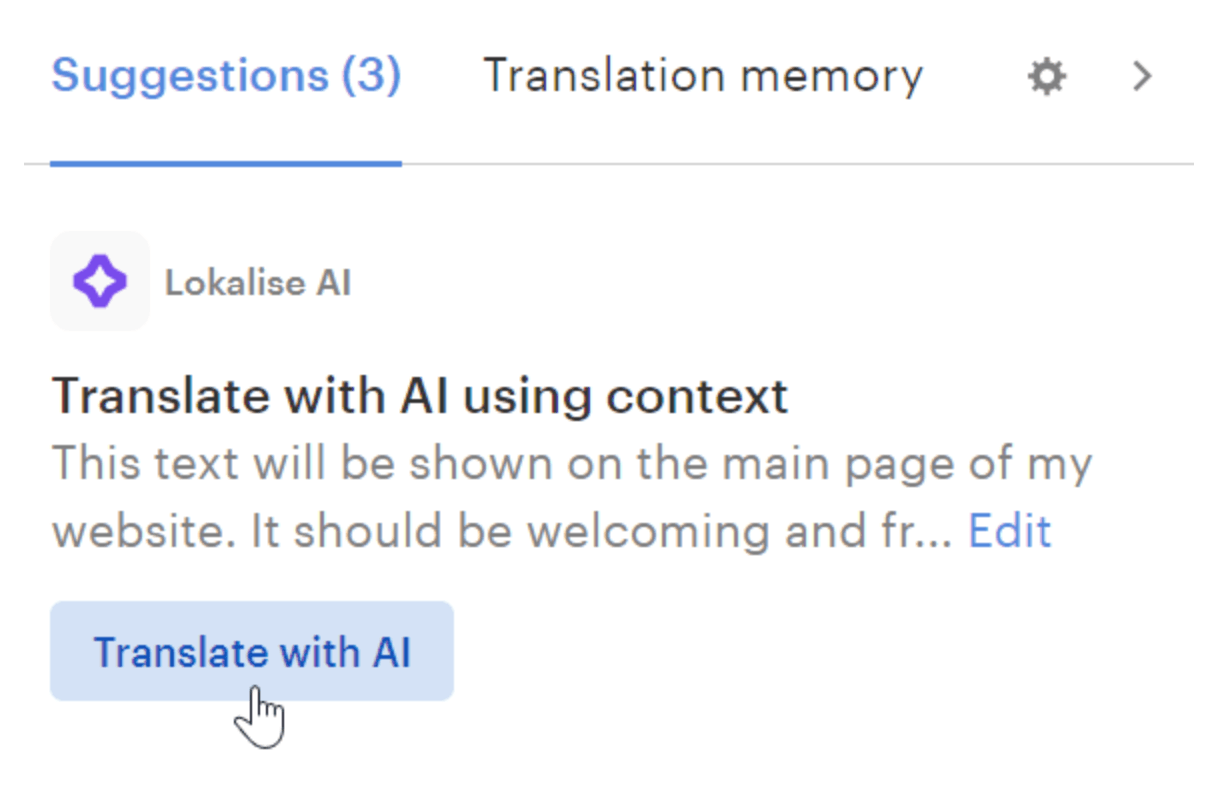

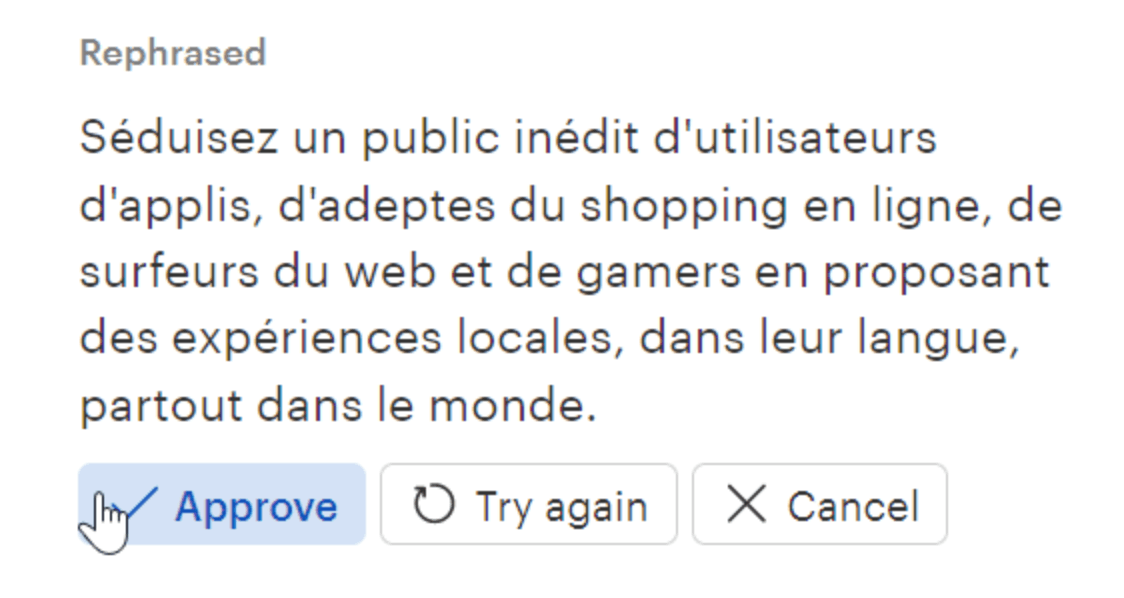

In contrast, more advanced solutions like Lokalise AI let you provide the model with context, which leads to more accurate translations:

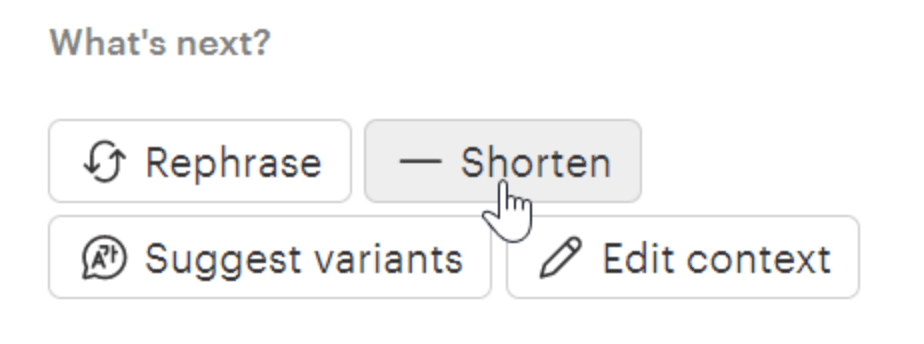

You can then approve the translation, ask the AI to try again, or manually post-edit the translated text.

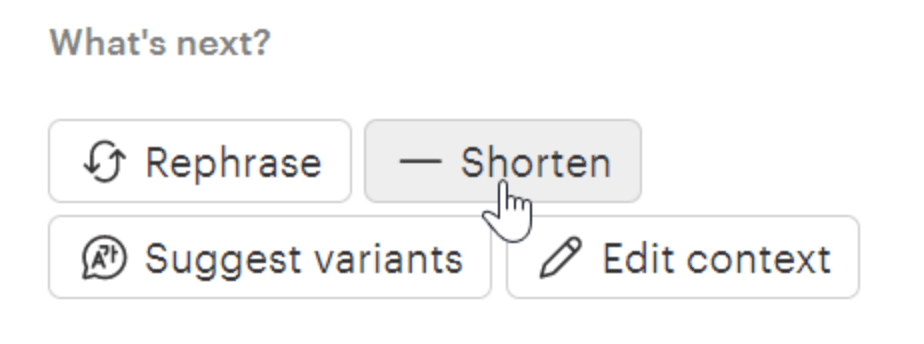

It’s also possible to ask for a shorter version and iterate with AI so that you actually get a translation that works for your use case.

Want to learn more? Visit Lokalise AI to discover how to translate 10x faster, without compromising quality.

Real-time translation in apps

Apps like Google Translate and Microsoft Translator use NMT to instantly translate speech, text, or images. Whether you’re navigating a foreign city or trying to communicate with someone who speaks another language, this is where machine translation tools come in handy.

Multilingual customer support

Many companies use NMT to translate support tickets, chat messages, and help center content. This helps them serve customers in multiple languages without needing a large team of human agents for each one.

E-commerce and product localization

Online stores use neural translation to localize product listings, descriptions, reviews, and ads. It allows them to reach global markets faster and offer a more personalized experience to international shoppers.